Horses wear headgear in races to improve their racing. It may be to block vision, or to hold the tongue in position to prevent it blocking an airway, or any number of other reasons. There are seven types of headgear in use in horse racing, and these are: It may improve the racing for the…

Get access to the PR and VDW ratings and find top-rated winners every day.

Find out which horse has been tipped by the most newspaper tipsters each day.

Stop doing all the hard work yourself and get access to daily tips.

Find out which horse is predicted to be the fastest horse.

Track horses, trainers and jockeys, get instant alerts when they're running and see how profitable you are using them.

Stop working out the results of your bets by hand. Simply add your bets each day and let us automatically show you the results.

Pep's Big Race Tips

- Profit: -10.09

- Tips to run: 0

- Win Rate: 28.13%

- ROI: -31.52%

Harry G Racing Tips

- Profit: -11.92

- Tips to run: 0

- Win Rate: 15.38%

- ROI: -45.84%

Stratford - 12:25

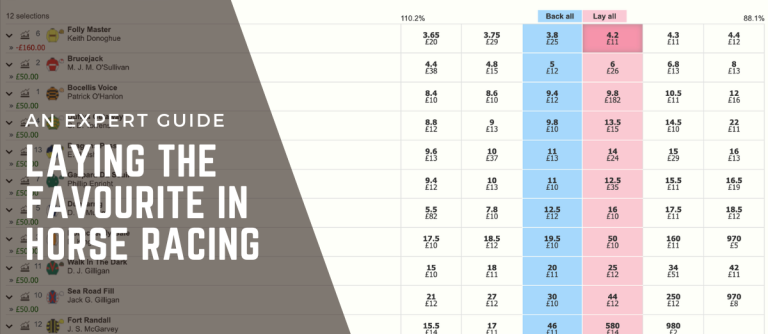

Introduction Welcome to this article on laying the favourites in horse racing. This guide has taken multiple people hundreds of hours to compile. Whilst we are only showing some of the statistics found through the research process, there was a huge amount of data analysis to find these. If you’re interested in analysing this data…

Also known as speed figures, speed ratings are numbers used to denote the ability of a horse on a given day. There are different types of ratings and calculations which are all considered to be speed ratings, just different versions. For a long time, the belief was that speed ratings were mainly useful in American…

There are two ratings available on the Race Advisor website, the PR (Power Rating) rating and the VDW (Van Der Wheil) rating. To access these ratings for every race is just £0.87 per day, and you can access them on a day-by-day basis, with no subscription. The ratings cost is capped at £9.57 in any…

Humankind has engaged in horse racing for millennia. There are records of chariot racing dating back to ancient times, with the sport a feature of the Greek Olympics in the 7th century BC. While UK racecourses such as Newmarket were established in the 1600s, it wasn’t until the second half of the 1700s that horse…

If you’re new to horse racing, it’s easy to get overwhelmed by the different terminology. For example, the classification system, designed to ensure more evenly contested races, is a source of genuine bemusement for many beginners. According to the British Horseracing Authority, there are approximately 14,000 horses in training in the United Kingdom. As you…

If you want to feel good, you need to keep laughing. Which is why we’ve gone out and found the funniest horse racing jokes, one-liners, puns and quotes from the far corners of the internet to keep you entertained. If you love a bad joke, then you’ll love this post! Funniest horse racing jokes and…

What is ‘nap’ in horse racing? A ‘nap’ in horse racing is the single horse which a tipster (a person who provides betting advice) considers to be the best bet of the day. Is horse racing fixed? There is always a lot of concern as to whether horse racing is fixed. This is most likely…